Open-Ended Evolution for Minecraft Building Generation

This paper proposes a procedural content generator which evolves Minecraft buildings according to an open-ended and intrinsic definition of novelty. To realize this goal we evaluate individuals' novelty in the latent space using a 3D autoencoder, and alternate between phases of exploration and transformation. During exploration the system evolves multiple populations of CPPNs through CPPN-NEAT and constrained novelty search in the latent space (defined by the current autoencoder). We apply a set of repair and constraint functions to ensure candidates adhere to basic structural rules and constraints during evolution. During transformation, we reshape the boundaries of the latent space to identify new interesting areas of the solution space by retraining the autoencoder with novel content. In this study we evaluate five different approaches for training the autoencoder during transformation and its impact on populations' quality and diversity during evolution. Our results show that by retraining the autoencoder we can achieve better open-ended complexity compared to a static model, which is further improved when retraining using larger datasets of individuals with diverse complexities.

Authors

- Matthew Barthet | University of Malta

- Antonios Liapis | University of Malta

- Georgios N. Yannakakis | University of Malta

Published

July 8th, 2022

Indicates interactive elements

Contents:

I. Introduction

Early works on computational creativity approached the task of content generation by searching the problem space using evolutionary algorithms and an extrinsic objective function [1]. As the problem spaces and fitness functions become more complex, however, such algorithms tend to suffer from premature convergence to suboptimal solutions. To overcome this issue, modern creative artificial intelligence (AI) algorithms have shifted toward abandoning objectives altogether and using novelty search to reward solutions based on their diversity [2]. Whilst simple novelty search helps avoid the pitfalls of objective functions, it often still suffers from its own search biases and population convergence [3], and does not guarantee that solutions exhibit desired qualities. Open-endedness in evolution through novelty [4] is an important topic in computational creativity and artificial general intelligence, and is at the center of this project.

Recent works on achieving open-endedness in procedural content generation (PCG) have focused on using an intrinsic definition for novelty which is defined on the system's own output. Intrinsic motivation (IM) in artificial systems has been identified as an important factor for achieving higher forms of open-ended creativity, and in situations where the objective of the system is difficult to model [5].

The Deep Learning Novelty eXplorer (DeLeNoX) algorithm [3] approaches an IM novelty function by assessing novelty in terms of a higher-level representation, determined by an autoencoder (CNN). By allowing the generator to adjust its measure for novelty according to its own observations, DeLeNoX is able to continually adapt its focus to search beyond its current biases, a concept which is critical for achieving open-ended evolution. More recent works have applied this intrinsically motivated approach to a variety of tasks, such as 2D artifacts [6, 7], interesting behaviors in robots [8], and search space illumination [9]. Our approach builds upon DeLeNoX, expanding the algorithm on more complex 3D structures.

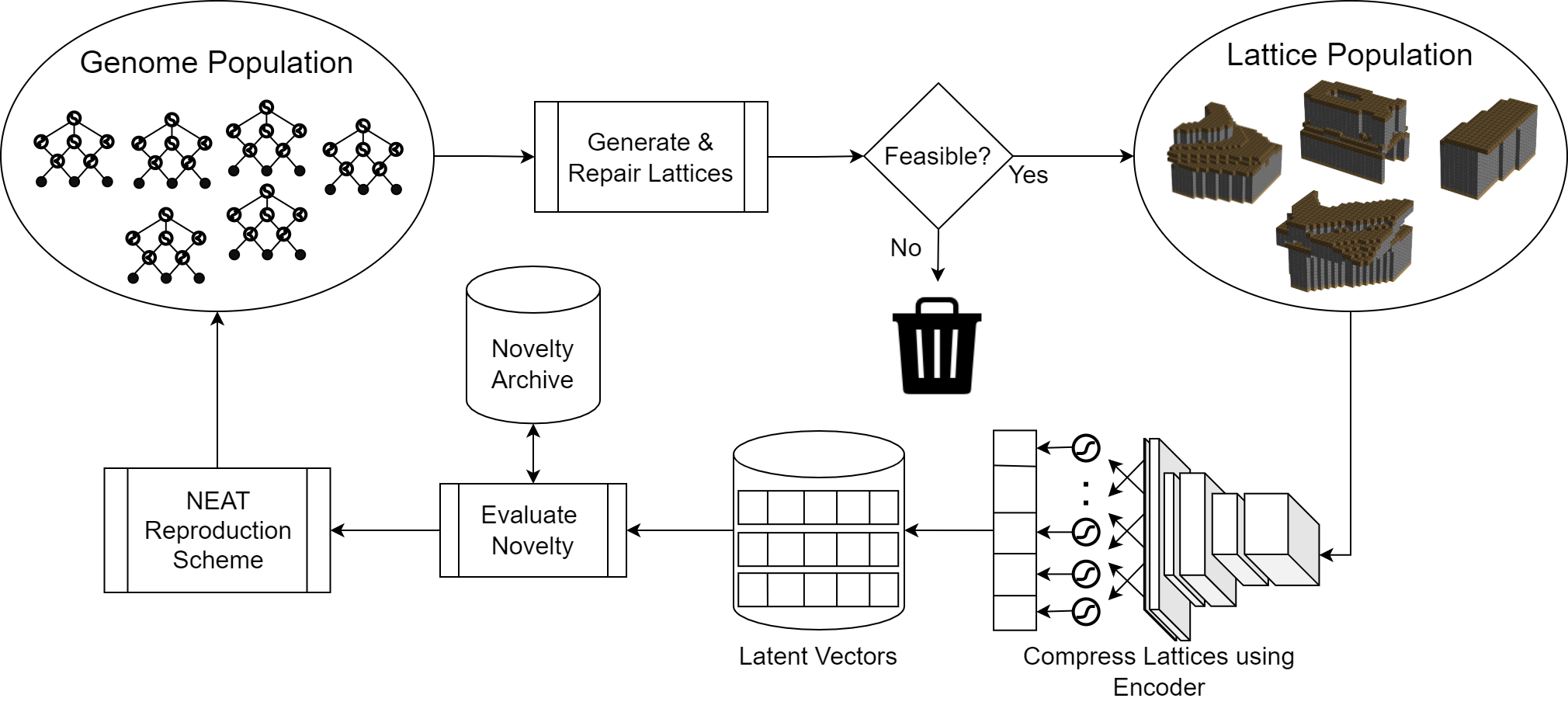

In this paper, we propose a computational system designed to autonomously create interesting Minecraft buildings using an intrinsic and open-ended definition of novelty. Sandbox games such as Minecraft [10] are arguably the perfect canvas to illustrate an artificial system's creativity: their open-ended gameplay allows the player to create any structure that can be expressed as a set of voxels. On a high level, the system alternates between phases of exploration and transformation. During exploration, the latent space defined by the current autoencoder is explored as thoroughly as possible by applying constrained novelty search [2] to neuroevolution [11]. Repair functions ensure individuals abide by a set of basic rules for buildings, in place of an objective function. During transformation, the most novel individuals from each population form a training set to retrain the autoencoder, modifying the latent space (and distance function) and opening up new areas of the solution space to explore. The new autoencoder is used for the next iteration of the algorithm, which can continue until stopping criteria are met.

Our findings suggest that redefining the latent space using novel data with a diverse range of structural complexity improves the generator's ability to find more complex and novel features in its output. To our knowledge, this approach remains untested for generating complex 3D structures such as Minecraft buildings. Current research into PCG for Minecraft focuses on the goals laid out in the Generative Design in Minecraft Competition [12] to design settlements and buildings, without emphasizing creativity in the generation process. The buildings generated are often static, rule-based systems that do not involve any optimization methods at all. We position this work to fill in this space and potentially inspire more creativity-focused building and/or settlement generators.

II. Related Work

This section provides a brief overview of computational creativity and its link to PCG and games, as well as a look at existing work on assessing novelty in the latent space, and a summary of the state of PCG in Minecraft.

II-A. Computational Creativity and Games

Computational creativity refers to the study of computer systems which generate content or behave in a manner that an unbiased observer would consider creative, whilst being capable of challenging humans on both a creative and scientific level [13]. Whilst early work in this field targeted PCG in isolated, single-faceted domains such as music [14] and stories [15], games have quickly become recognized as the cutting-edge application for the study of computational creativity [16]. This is due to their content-intensive and multi-faceted nature, which provide rich interactions with the player. Creative systems can also be used as mixed-initiative tools alongside humans [17, 18], helping them to understand and promote the creative process [19].

A critical concept in computational creativity—and artificial life—is open-endedness (OE). Open-ended creativity can be informally defined as the ability of a biological or artificial system to keep producing novelty and complexity without exhaustion [4]. This definition can be further broken down into three classes (exploratory, expansive, transformative) according to the extent to which the system must redefine its internal models and its ability to alter the boundaries of the search space to explore new regions and/or dimensions [20]. Modelling and evaluating OE is an active research topic in this field [21]. Our approach to evaluating novelty in the latent space does not fit ideally with the three classes mentioned above. Whilst our generator could be said to produce expansive novelty in the latent space through transformation, the boundaries and dimensions of the search (phenotype) space remain static, meaning it can only achieve exploratory novelty in the problem space. Achieving higher forms of OE in the phenotype space could involve exploiting the phenotype resolution (expansive) and availability of materials (transformative) to open up new dimensions of the search space.

Intrinsic motivation (IM) refers to an individual's desire to explore new behaviors out of internal curiosity in the outcome [22], acting freely without a separate motive or external influence [23]. IM in artificial systems has been used in situations where the objective is difficult to define, in situations where transformative open-ended novelty is desired, and as a method for increasing creative autonomy [5]. These properties directly align with our goals for implementing a Minecraft building generator with open-ended complexity and creativity. Our proposed generator periodically redefines its novelty function according to interesting individuals it has generated in the past (without any external influence), satisfying the definition for IM given above. Through our evaluation, we discuss the issues and benefits of largely ignoring the quality (extrinsic factors) of candidates and solely focusing on their novelty as fitness.

Within games, procedural content generation (PCG) is commonly tackled as a search-based task [1], treating the problem as an optimization task by evolving a population of artifacts according to a desired fitness function which could involve aspects of gameplay behavior or experience [24]. PCG for quality-diversity [25] is a recent search-based approach that uses quality-diversity algorithms (e.g., MAP-Elites [26] with novelty search [2] or surprise search [27]) to preserve diversity in the behavior space while improving their performance according to a desired fitness function. On the other hand, PCG via Machine Learning (PCGML) [28] uses machine-learned models trained on existing game content to generate new data, removing the need for complicated code to express the designer's intentions for the target domain. The models created through PCGML algorithms can be used to recognize and repair infeasible components of individuals [29], predict properties of their content [30], and compress the content into fewer dimensions using an autoencoder [31]. We cover uses of PCGML and other algorithms specifically for Minecraft in section II-C. Our approach combines the evolutionary principles of PCG-QD by targeting novelty in the latent space and ensuring quality through constraints, while also leveraging learned representations not for generation directly—as in PCGML—but for the evaluation of novelty in a search-based paradigm.

II-B. Novelty in the Latent Space

Assessing an individual's novelty through its latent representation is a viable method for achieving open-endedness (OE) as the boundaries of the latent space can be expanded by retraining the encoder model. Furthermore, through this approach, novelty is calculated on the high-level patterns encoded in the latent representation, providing a more meaningful measure regarding the (current) possibility space. The deep-learning novelty explorer paradigm [3] (DeLeNoX) leverages this concept by cycling between phases of exploration and transformation. In exploration, the generator uses the current autoencoder to thoroughly search the current latent space. In the first implementation of DeLeNoX [3], this was accomplished through neuroevolution of 2D spaceships with constrained novelty search [32] in the latent space. During transformation, the autoencoder is retrained using the latest novel individuals from the previous exploration phase. This effectively readjusts the boundaries of the latent space and opens up new potential areas of interesting individuals, improving the generator's ability to conduct an open-ended search.

The high-level approach of DeLeNoX has since been adapted and extended for a number of other use cases. A similar study to DeLeNoX generates interesting 2D shapes using variational autoencoders [6], demonstrating its effectiveness in generating diversity over directly evolving individuals' latent vectors. Similar methods have been used for the autonomous discovery of novel robot behaviors [8], data-efficient search space illumination [9], the generation of interesting images [7], and Super Mario Levels [33] with open-ended novelty or other diversity measures. Another DeLeNoX-based approach has been used for visual DOOM playing using neuroevolution and a latent representation of raw pixel data [34]. To the best of our knowledge, the core principles of DeLeNoX—as explored in this paper—have never been applied to generate novel 3D content such as Minecraft buildings.

II-C. Minecraft Settlement Generation

Minecraft's open-ended, sandbox design makes it a brilliant canvas for players and computers to express creative behavior by building structures that adapt to the world around them [10]. Given these strengths, it has become a popular testbed for research, most notably in the Generative Design in Minecraft Competition (GDMC) [12]. The GDMC encourages people to design AI programs for Minecraft that achieve human-like creativity. The task presented by the competition is to develop an autonomous Minecraft settlement generator that produces functional settlements capable of adapting to their environment while remaining aesthetically pleasing and evoking an interesting narrative to the viewer. Due to the complexity of these challenges, the first round of contestants did not focus on the creativity of the individual buildings, opting for simpler rule-based constructive generators [35].

Content generators have been developed for Minecraft outside the objectives related to the GDMC. The chronicle challenge addresses computational storytelling by requiring participants to generate meaningful stories for their settlements [36]. Cellular automata have been used to create realistic buildings with a basic design for the interior volume [37]. Neural cellular automata have been used to create structures such as caves, buildings, and trees with increasing complexity and the ability to regenerate and repair themselves [38]. The generation of the world itself has also been researched through works such as World-GAN, which attempts to address the shortcomings of the static world generator bundled with Minecraft through generative adversarial networks [39]. We position this work to fill the gap in the research space for interesting and creative buildings for Minecraft settlements.

III. Methodology

This paper proposes an autonomous Minecraft building generator with open-ended creativity and complexity by evaluating novelty in the latent space. We adapt the core principles of DeLeNoX [3] for this task, describing our approach to building representation and generation in Section III-A, the exploration phase in Section III-B, and the transformation of the autoencoder in Section III-C.

III-A. Representation and Generation

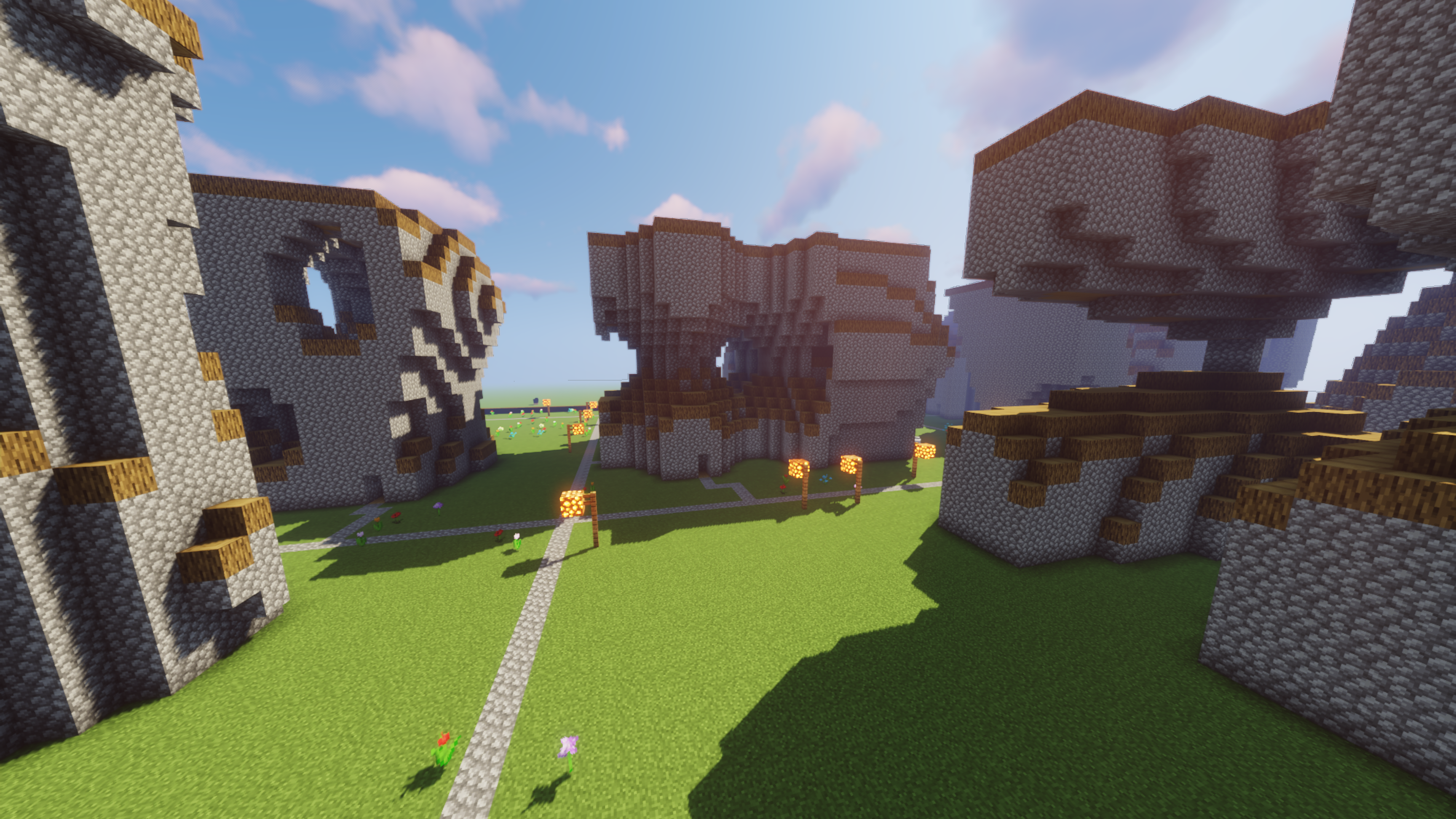

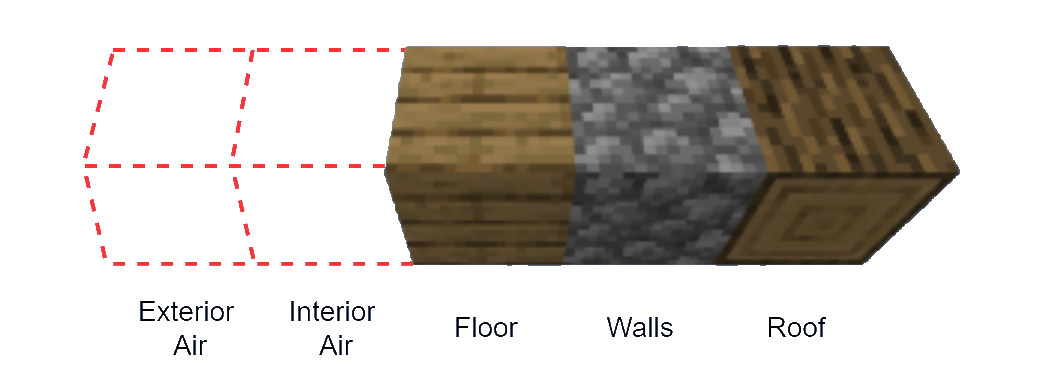

In our approach, we represent candidate solutions as three-dimensional arrays of voxels: each voxel encodes a material ID for its location. This aligns with Minecraft's in-game representation of data and allows us to transfer the content generated directly to the game using useful tools such as MCEdit [40], an example of which can be seen in Figure 1. It should be noted that our representation does not directly use Minecraft materials such as wood and stone but rather encodes architectural properties of the space that are needed for the evaluation of the building. We provide the mapping of our voxel types to Minecraft materials for generating in-game models in Figure 2. The material in each voxel is represented as a one-hot encoded vector of binary values. The chosen resolution for the lattices was 20x20x20 voxels split into 5 channels (one for each material), similarly to how an RGB image is represented. The resolution of the lattices and number of materials available to the generator were kept constant and could not be altered during the evolutionary process.

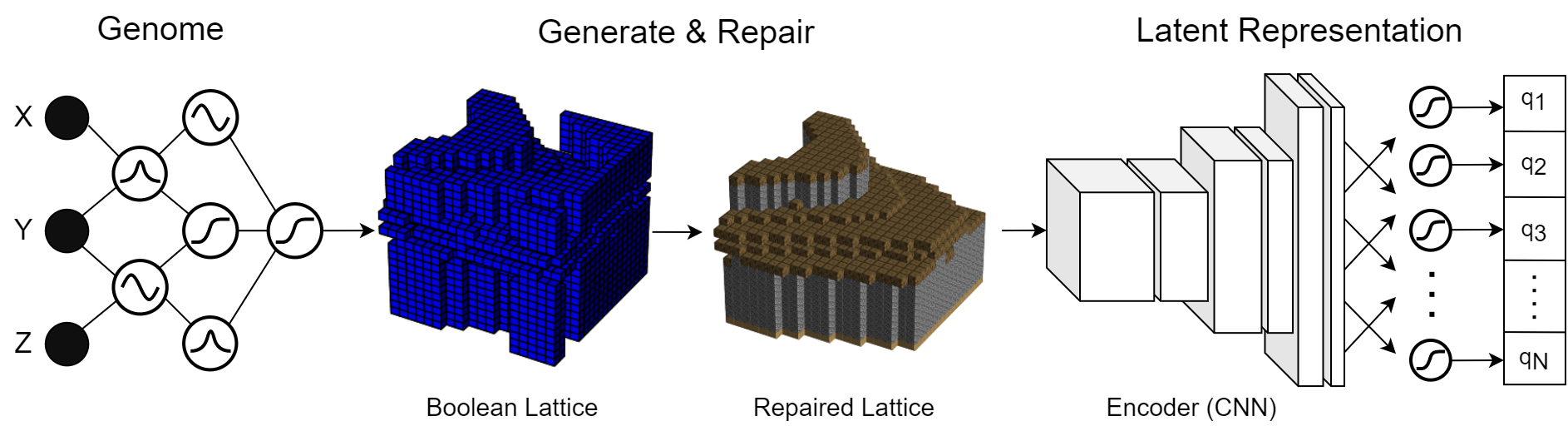

The genetic representation for individuals is a critical design decision when creating search-based content generators, especially when aiming for open-ended evolution, as this choice can limit the maximum complexity achievable. We use compositional pattern producing networks (CPPNs) to encode the 3D lattice [41], due to their ability to generate aesthetically pleasing structures. CPPNs are also capable of encoding a phenotype of any resolution, providing an ideal balance between representational power and strong computational efficiency. CPPNs have been proven useful for the constrained evolution of 3D voxel-based structures for robot control [42]. The CPPN iterates over all 3D coordinates of the lattice (using x, y, z as input), and its output is a boolean value that determines whether the voxel should be filled in that location in the lattice. Figure 3 depicts the process of generating lattices from genomes, ensuring they follow certain rules through repair and constraints, and compressing them into a latent vector using an encoder. The autoencoder is fed the material lattice, rather than the boolean lattice, to ensure material data is encoded in the latent vector and considered when assessing the novelty of a structure.

As noted above, the output of the CPPN is a boolean lattice of empty or filled voxels (the architectural ``hull'' of the building). A set of repair functions remove problematic sections of this boolean hull and convert it into the material lattice (with 5 materials described above). Repair functions are applied in sequence: first, any floating voxels (i.e., not indirectly attached to a floor voxel) are removed using a flood-fill algorithm. Flood-fill works by picking a starting point from the list of floor voxels and recursively visiting its neighbors in all directions, marking solid voxels and ignoring empty voxels. This process is repeated until every floor voxel has been flooded from, discarding the solid voxels not visited during the process. Next, only the largest detected structure is kept, removing any voxels detached from this main structure.

After the repair process, materials are assigned to each filled voxel based on a rule-based system in the following order: a voxel at the absolute bottom of the coordinate system becomes floor, a voxel with an empty voxel above it becomes roof, voxels surrounded on all sides by filled voxels become interior air, finally any remaining empty voxels are marked as exterior air and remaining filled voxels become walls. This process also carves out the interior of the structure, marking the voxels as interior air which is a helpful distinction for tracking the interior volume of the building. A final constraint is checked at this point: a structure must contain an valid location for an entrance. This is checked by iterating over the floor voxels and checking if there is a wall at least three voxels high for an entrance (in any of four possible rotations) adjacent to interior air voxels to ensure the area is traversable.

III-B. Exploration

The role of the exploration phase is to thoroughly search the current boundaries of the latent space for interesting candidate solutions. Our approach to this phase follows DeLeNoX, where neuroevolution of augmenting topologies (NEAT) is used to evolve several populations of CPPNs using constrained novelty search in the latent space [43]. The use of NEAT is ideal for open-ended evolution as it is capable of producing unbounded genetic complexity while also preserving genetic diversity through speciation. During exploration, the generator runs NEAT on a set of separate populations and the current autoencoder.

At the start of each generation of NEAT, the process described in Section III-A is executed to create a population of lattices from the genome pool. Due to our single constraint, any individuals that fail it are discarded and replaced during the next round of reproduction. The feasible lattices are compressed using the current autoencoder (as described in Section III-C resulting in a second `snapshot' of the population containing their latent vectors. These latent vectors are used to calculate the novelty scores for each individual which is done using the average Euclidean distance to the $k$-nearest neighbors in the latent space (Eq. 1).

$$ \begin{equation} \label{eq:novelty} n(i) = \frac{1}{k} \sum^{k}_{m=1} \sqrt{ \sum^{D}_{n=1} (q_n(i) - q_{n}(\mu_m))^2} \end{equation} $$

where $n(i)$ is the novelty score of individual $i$, $k$ is the number of neighbors in the current population and the novel archive that are considered for novelty ($k=15$ in this paper), $D$ is the number of dimensions in the latent vector and $q_n(i)$ is the value of the latent vector at position $n$ when individual $i$ is provided as input to the autoencoder.

A novelty archive is maintained for each population to ensure current individuals are compared to previous novel examples. At the end of each generation of NEAT, the $\alpha$ most novel individuals are inserted into the novelty archive provided they are unique (no identical matches in the archive). The novelty archive contains both the lattice and latent representation of novel individuals, as these will be needed during transformation. At the end of each generation, we record a number of statistics on the properties of buildings generated (bounding box size, symmetry, instability and surface area) for our evaluation. These properties could be used to implement more complex feasibility constraint functions in the future.

III-C. Transformation

As shown in Eq. (1), exploration takes place in the latent space by calculating the novelty scores based on each individual's latent vector. While this exploration is capable of effectively searching the current latent space for novel individuals, it is restricted by the boundaries of the manifold. These boundaries are caused by the model that compresses the lattices into latent vectors, which in our case takes the form of a 3D convolutional autoencoder. Through exploration alone, the generator is only capable of achieving exploratory novelty [20], limiting its ability to discover new features in the structures of buildings. By assessing individuals' novelty in the autoencoder's latent space rather than in the lattice space, the generator can shift the boundaries of its current search space by retraining the autoencoder model using individuals it has previously identified as interesting (novel). This results in an intrinsically motivated reward function that is more capable of achieving higher forms of open-endedness (expansive, transformative) compared to a static definition of novelty.

When compressing a 20x20x20 lattice into a 1D vector of 256 values, the encoder model consists of three alternating layers of convolution (of size 3x3x3) and downsampling (of size 2x2x2) which can be seen in Figure 3. The weights of this model are assigned during training and are directly responsible for how individuals assess novelty. During transformation, we retrain the encoder model using a training set of individuals taken from the previous exploration phase(s). This retraining process is critical for achieving higher forms of open-endedness, as the new weights of the encoder shift the boundaries of the search space and open up new areas for potentially interesting individuals. From a technical perspective, the retraining allows the encoder to better identify high-level patterns in the building's structure, improving its ability to accurately compress more complex structures.

Transformation begins by retrieving novel individuals from each population of the previous exploration phase(s) and can be done in one of two ways: using the most novel individuals from each population of the previous exploration phase(s), or using the novelty archives of each population. Both approaches ensure that the autoencoder is transformed using interesting content only, which helps to better guide the next exploration phase towards interesting areas of the search space. The two approaches differ in that the former assembles training sets from the final populations of the exploration phase(s), while the latter contains individuals deemed novel throughout evolution. The autoencoders are retrained from scratch using the assembled training set using the categorical cross-entropy loss function [44]. The novelty archives collected so far are updated using the new encoder, assigning new latent vectors for past novel individuals as this will impact how novelty finds neighbors in the archive via Eq. 1.

IV. Experimental Protocol

Assessing the creativity and open-endedness of content generators and the quality of their products is challenging due to the subjectivity of such notions. Since novelty scores are not directly comparable across experiments, we assess the diversity of the structures generated by comparing them at a voxel level. We use Kullback-Leibler (KL) divergence as our metric for assessing voxel diversity, which has proven efficient for comparing game levels [45,33]. We also measure the correlation between this KL divergence measure and each experiment's distance measure in the latent space, as this provides insight into the regularization of the latent space and the novelty function's ability to group meaningfully similar individuals together. We also evaluate the reconstruction accuracy of the autoencoders, which directly quantifies the model's ability to identify high-level patterns in the buildings and therefore discover more meaningful novel features. Finally, we provide a qualitative comparison between experiments by visualizing the structures generated and observing the differences in complexity and patterns found to be novel.

Since our method focuses on the transformation of the search space through a latent vector when assessing novelty, the experiment explores different ways of training the autoencoder (AE) and includes two baselines. The first baseline is a static AE which was trained on the seed populations (which are common across all experiments) and is not retrained during the transformation phase. While all methods in this paper use the static autoencoder for their first exploration phase, every method except static uses a new autoencoder after the first transformation phase. The static AE baseline tests whether retraining the autoencoder has any impact on its own compressibility or the quality of generated results. The second baseline is the random AE, wherein each transformation phase a new autoencoder is generated with random weights and is used as-is for the following exploration phase. This baseline tests whether guiding novelty search based on the patterns of the past buildings created has any impact (compared to an untrained autoencoder). The remaining three methods use different training sets during the transformation phase. The novelty archive AE (NA-AE) combines all novelty archives from each population in the previous exploration phase to form the training set for the autoencoder. The latest set AE (LS-AE) combines only the 100 most novel final individuals of the populations in the previous exploration phase into a training set of 1000 individuals, while the full history AE (FH-AE) combines the final individuals in every population of every exploration phase so far to train the autoencoder (1000 individuals multiplied by the number of phases so far). The three tested methods are designed to assess the importance of all previous exploration steps versus only the last one, and whether maintaining a history of the interim novel results during evolution would yield more robust models.

The experiments were run for 10 iterations of the algorithm (exploration phase followed by transformation phase), evolving 10 separate populations of 200 individuals each. Each exploration phase runs 100 generations of CPPN-NEAT, and transformation retrains the autoencoder for 100 epochs, with a batch size of 64, the ``Adam" optimizer and categorical cross-entropy loss function. Novelty was calculated using the average Euclidean distance to the 15 nearest neighbors in the latent space, and up to 3 individuals are inserted into the novelty archive per generation. For these experiments, autoencoders were trained to compress the $20{\times}20{\times}20{\times}5$ lattices into latent vectors of 256 real values. The first iteration of each experiment uses the same set of seed populations (randomly initialized CPPNs with no hidden nodes) which are used to pre-train an autoencoder (the static autoencoder used as baseline). The first exploration phase uses this trained autoencoder and the seed populations to start the process described in this section. This ensures that whilst there is an element of stochasticity to the exploration and transformation processes, all the experiments start from a common population set and encoder model.

V. Results

This section attempts to summarize the findings of the experiments described in Section IV, focusing on the autoencoders' reconstruction accuracy experiments (V-A), the diversity of the final evolved buildings (V-B), and finally providing a brief qualitative comparison between experiments (V-C).Reconstruction Error

Table 1 shows the reconstruction error of the final autoencoders of each experiment, when they reconstruct the final populations of each experiment (and, in the Overall column, all final populations produced by all five methods). On the one hand, we are interested in the ability of the autoencoder to accurately reconstruct individuals evolved based on this autoencoder's notion of novelty (i.e. when AE and pop. are the same). On the other hand, we want to know whether some autoencoders are better at reconstructing buildings in general (i.e. individuals produced by other experiments) as this would indicate that either the autoencoder is more powerful and generalizable or that the buildings produced by different autoencoders and experiments share similar patterns.

| AE \ Pop. | Experiment | Overall | ||||

|---|---|---|---|---|---|---|

| Static | Random | Latest Set | Full History | Nov. Archive | ||

| Static | 10.27 ± 0.82 | 16.03 ± 2.77 | 14.00 ± 0.49 | 15.73 ± 0.64 | 15.5 ± 1.52 | 14.31 ± 2.0 |

| Random | 92.61 ± 0.11 | 92.05 ± 0.62 | 94.59 ± 0.25 | 94.65 ± 0.3 | 92.45 ± 0.3 | 93.27 ± 1.0 |

| Latest Set | 21.57 ± 1.15 | 26.42 ± 2.92 | 5.93 ± 0.24 | 12.68 ± 1.07 | 20.26 ± 1.15 | 17.37 ± 6.0 |

| Full History | 7.19 ± 0.65 | 13.08 ± 3.00 | 5.07 ± 0.27 | 4.01 ± 0.34 | 12.06 ± 1.34 | 8.28 ± 3.0 |

| Nov. Archive | 7.91 ± 0.49 | 11.92 ± 2.18 | 9.97 ± 0.39 | 11.56 ± 0.67 | 10.41 ± 0.90 | 10.35 ± 1.0 |

Results in Table 1 indicate that the full history autoencoder (FH-AE) is not only able to reconstruct its own populations more accurately, but is also able to reconstruct all other sets of generated buildings from all experiments (see Overall column). Moreover, it is interesting to note that the latest set autoencoder (LS-AE) is able to reconstruct LS populations, but struggles when facing populations generated by all other methods. Interestingly, the reverse is true with the novelty archive autoencoder (NA-AE) which has comparable or lower reconstruction errors in other populations than the ones it was evolving. While the random (untrained) autoencoder understandably performs very poorly, the static autoencoder is surprisingly robust even when reconstructing the latest populations regardless of experiment. This may indicate that the patterns of the CPPNs that produce the buildings remain concise, regardless of the CPPNs' augmented topologies at later stages. On the other hand, buildings evolved using the static autoencoder are also fairly predictable, as their reconstruction error is low for both the FH-AE and NA-AE.

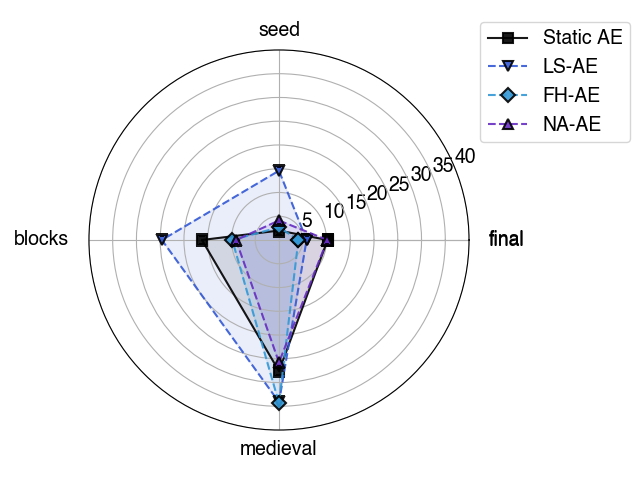

Figure 5 shows the same reconstruction error measure tested across four different datasets to visualize the autoencoders' accuracy across a variety of inputs. The seed and final populations refer to populations at the start (before evolution) and end (end of 10th exploration phase) of each respective experiment. The "Cubes" dataset consists of 200 buildings made by randomly generating cuboid hulls of different sizes and applying the repair pipeline to produce material lattices. The "Medieval" dataset consists of a population of buildings generated using the "AHouseV5" filter by Adrian Brightmoore [46] in MCEdit, re-assigning the materials for each voxel through the repair pipeline. Unsurprisingly, the random AE performs very poorly across all four datasets, followed by LS-AE, which struggled to reconstruct anything except its own final population. The NA-AE proved to be the most robust model when given completely unseen data, displaying the best reconstruction accuracy for the Medieval and Cubes datasets. As we have seen in Table 2, the FH-AE shows the best performance on its final populations, though (like the static AE) struggled slightly compared to NA-AE on completely unseen data. The NA-AE seems to benefit from having the largest amount of (and most diverse) training data for transformation compared to the rest of the experiments.

KL-Divergeance

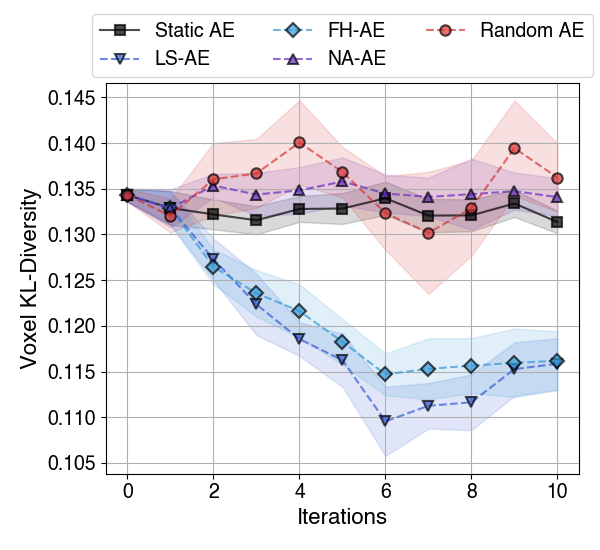

Figure 6a shows the progression of the average KL divergence for the populations of each experiment. This was calculated in the voxel space and measures the average KL divergence of each individual to the rest of the population. The results show that FH-AE and LS-AE produce less diverse content in the voxel space over time compared to the rest of the experiments. On the other hand, the static AE produces more diverse content in the voxel space, without varying over time; this is expected as the autoencoder is not retrained between exploration phases. Interestingly, the NA-AE produces a similar trend to the static AE even though it is trained on the largest dataset of individuals during transformation. The random AE produces marginally more diverse content, albeit with a larger deviation which is likely caused by the randomized weights of the autoencoder. It is worth noting that while the LS-AE and FH-AE populations trend downward in voxel-based diversity, it does not in itself mean that the experiments are not generating novel content in the latent space.

Figure 6b depicts the evolution of the average KL divergence from the initial seeds (before evolution starts). Similar to the previous metric, this was calculated by computing the average KL diversity of each individual to every individual in the corresponding seed population. The results show that whilst the LS-AE and FH-AE produced the least diverse individuals compared to each other, these same individuals were the most diverse from the initial seeds. The static AE produced the least diverse content for this metric with the least variation over time, indicating that without retraining the autoencoder NEAT struggles to produce significantly different content over time (poor open-endedness). The NA-AE tends to marginally increase in diversity from the seed populations over time, though not to the extent of LS-AE and FH-AE. The random AE produced the most inconsistent trend which is to be expected due to the lack of training for the autoencoder during the transformation phase.

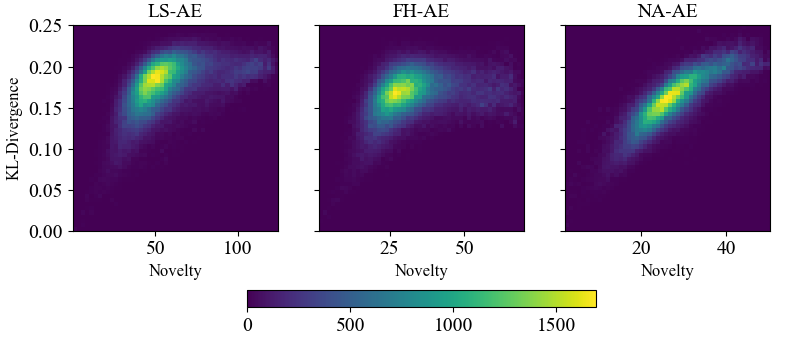

While autoencoders were trained solely to minimize reconstruction error (i.e., without an explicit regularization component), we visualize the correlation between the novelty score in the latent space and voxel KL divergence in Figure 7. A regularized latent space is desirable as it ensures similar phenotypes (buildings) are grouped together in the latent space whilst dissimilar individuals are distanced appropriately. This ensures the validity of the autoencoder's distance measure, as identifying novel individuals in the latent space will result in more meaningfully different individuals in the phenotype space. There is a clear linear correlation between the two diversity measures for the NA-AE experiment's final populations, with a Pearson correlation of 0.84. However, the LS-AE and FH-AE distance functions both produce significantly weaker correlations between the two measures, with a Pearson correlation of 0.53 and 0.35 respectively.

Qualitative Comparison

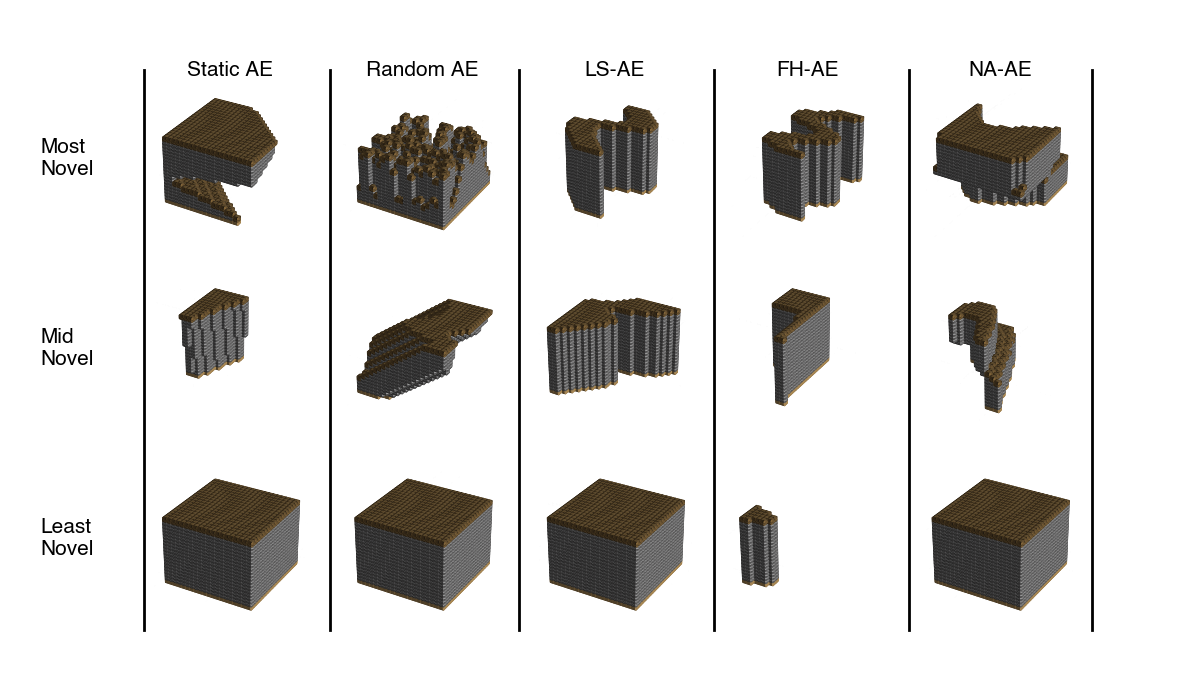

Figure 8 depicts the individuals containing the minimum, median, and maximum novelty from each experiment's final populations (and seed population for comparison). By looking at these examples, we can get a qualitative idea of how novelty and complexity are evolving over time. The seed population and static AE share similar high-level patterns, which is understandable given they use the same autoencoder and originate from the same latent space. The effect of the lack of training for the random AE experiment is clearly reflected in results that are far noisier than the other experiments. The LS-AE, FH-AE, and NA-AE experiments show slight differences in the overall structures generated compared to the seed set, though there is no significant jump in structural complexity. This indicates that while novelty search is promoting diversity in the latent space, it does not guarantee diversity in the phenotype space and does not explicitly evolve towards desired qualities as in quality-diversity algorithms such as MAP-Elites [26].

VI. Discussion

In this paper we apply novelty search in the latent space (with basic constraints) for the challenge of creative building generation in Minecraft. We tested three different approaches to the transformation of the autoencoder which differ in which evolved content is used for training. Results showed that autoencoders that used more past data (either as novelty archive or as final individuals of many exploration phases) were the most robust and best performers in all reconstruction error tests. The autoencoder trained on the latest set of results, on the other hand, seemed to overfit to the data and performed poorly on unseen data. The baseline which skipped the transformation phase altogether performed respectably across all the reconstruction tests, indicating the general patterns produced by the CPPNs remain concise even in later stages of evolution. The latest set autoencoder (LS-AE) and full history autoencoder (FH-AE) experiments had the least diverse final populations in the phenotype space, but produced the most different results compared to the seed populations. The novelty archive autoencoder (NA-AE) experiment maintained a population diversity on par with the baselines while also increasing diversity from the seed dataset. Finally, the NA-AE produced a latent distance function with the best correlation to our voxel-based diversity measure.

This line of research has focused on the creativity of the proposed generator; however, the lack of additional constraints to govern the feasibility of buildings is clear in the examples generated during our experiments (see Fig. 5). This issue could be mitigated through constraints enforcing basic architectural qualities (e.g., stability, symmetry, interior space requirements) and using a feasible-infeasible two-population algorithm (FI-2POP) [47] to improve the realism of the output. Using this approach, the repair functions could also be converted to feasibility constraints to allow the CPPNs to generate lattices end-to-end without the need for repair. Another direction could be to seed the autoencoder or the initial population with pre-built structures (such as the medieval dataset used in this paper) to guide search towards house-like structures without imposing additional constraints and restricting creativity. Furthermore, the current approach does not create an appropriately designed interior space in the generated structures, simply carving out an enclosed volume and leaving it empty. This can be fixed with a simple rule-based generator to design the interior space or by incorporating a more complex interior generator into the pipeline to evolve the interior design in a creative fashion. Although the repair functions ensured that the buildings adhered to certain qualities, the current approach does not emphasize quality-diversity during evolution. Extending the current implementation with an algorithm such as MAP-Elites [26] could help ensure diversity is preserved in desired qualities (e.g., stability, size) besides just novelty. Beyond these extensions, it is important to test the generator on higher-resolution lattices, as the low resolution used in this paper is potentially limiting the visual impact of the output.

Latent vector evolution has most notably been applied to PCG for visuals [6,7,48], level content [3], robot behaviors [8], as well as data-efficient search space illumination [9]. To our knowledge, it has not been readily applied to constrained 3D structures, and so this paper opens up potential for further work in this domain. Our approach can also be applied to other facets within Minecraft and in new domains entirely. Broadly, it can be used in a mixed-initiative setting to promote creativity for level designers creating new content [17] by seeding the generator with human-designed content and generating novel suggestions. Within Minecraft, creative settlement generation (layout, adaptability, narrative) remains an open challenge presented by the GDMC report [35] which would benefit from open-ended evolution. Another interesting application would be generating agents with diverse/interesting behaviors which are evolved according to their surroundings. Our approach could also be adapted to other facets of computational creativity [16], such as the generation of audio files or game rules according to a set of constraints.

VII. Conclusion

In this paper we proposed an autonomous building generator for Minecraft targeting open-ended complexity and creativity. To achieve this the generator explores the problem space through CPPN-NEAT, evolving individuals according to novelty in the latent space, determined by a 3D autoencoder. The transformation phase retrains the autoencoder using high-performing (novel) examples from previous exploration phases to open up new areas of the search space and create more complex features. Whilst this work used very simple constraints for building generation, it provides a relatively unrestricted environment to test the creative capabilities of the generator. We tested various approaches for the transformation phase in order to identify its impact on population diversity and complexity over time, as well as the reconstruction accuracy of the autoencoders. Our results indicate that, by retraining the autoencoder, the generator is able to more effectively generate novel 3D structures compared to a static approach. The use of an autoencoder and CPPNs also ensures our approach is more scalable to higher resolution outputs, as the complexity of novelty search remains tied to the latent vector size. By addressing the limitations of our implementation, discussed above, we believe our approach can be an effective tool for generating diverse and creative content at a higher resolution, and with an open-ended complexity that is not currently achievable by the state of the art.

Acknowledgments

This project has received funding from the European Union's Horizon 2020 programme under grant agreement No 951911.

References

- J. Togelius, G. N. Yannakakis, K. O. Stanley, and C. Browne. "Search-based procedural content generation: A taxonomy and survey." IEEE Transactions Computational Intelligence AI Games, vol. 3, no. 3, pp. 172-186, 2011.

- J. Lehman and K. O. Stanley. "Novelty search and the problem with objectives." In Genetic programming theory practice IX, 2011.

- A. Liapis, H. P. Martínez, J. Togelius, and G. N. Yannakakis. "Transforming Exploratory Creativity with DeLeNoX." In Proceedings of the International Conference Computational Creativity, 2013.

- W. Banzhaf, B. Baumgaertner, G. Beslon, R. Doursat, J. A. Foster, B. M. De Melo, T. Miconi, L. Spector, S. Stepney, et al. "Defining and simulating open-ended novelty: Requirements, guidelines, and challenges." Theory Biosci., vol. 135, no. 3, pp. 131-161, 2016.

- C. Guckelsberger. Intrinsic motivation in computational creativity applied to videogames. Ph.D. dissertation, Queen Mary Univ. London, 2020.

- A. Hagg, S. Berns, A. Asteroth, S. Colton, and T. Bäck. "Expressivity of Parameterized and Data-Driven Representations in Quality Diversity Search." In Proceedings of the Genetic Evolutionary Computation Conference, 2021.

- A. Nguyen, J. Yosinski, and J. Clune. "Understanding innovation engines: Automated creativity and improved stochastic optimization via deep learning." Evolutionary Computation, vol. 24, no. 3, pp. 545-572, 2016.

- A. Cully. "Autonomous Skill Discovery with Quality-Diversity and Unsupervised Descriptors." In Proceedings of the Genetic Evolutionary Computation Conference, 2019.

- A. Gaier, A. Asteroth, and J.-B. Mouret. "Data-Efficient Design Exploration through Surrogate-Assisted Illumination." Evolutionary Computation, vol. 26, no. 3, pp. 381-410, 2018.

- Mojang. Minecraft. Game [PC], 2011.

- K. O. Stanley and R. Miikkulainen. "Evolving Neural Networks through Augmenting Topologies." Evolutionary Computation, vol. 10, no. 2, pp. 99–127, 2002.

- C. Salge, M. C. Green, R. Canaan, and J. Togelius. "Generative design in minecraft (GDMC) settlement generation competition." In Proceedings of the International Conference Foundations Digital Games, 2018.

- S. Colton, R. L. De Mántaras, and O. Stock. "Computational creativity: Coming of age." AI Magazine, vol. 30, no. 3, p. 11, 2009.

- G. A. Wiggins, G. Papadopoulos, S. Phon-Amnuaisuk, A. Tuson, and others. Evolutionary methods for musical composition. Univ. Edinburgh, Dept. Artif. Intelligence, 1998.

- F. Peinado and P. Gervás. "Evaluation of automatic generation of basic stories." New Generation Computing, vol. 24, no. 3, pp. 289-302, 2006.

- A. Liapis, G. N. Yannakakis, and J. Togelius. "Computational game creativity." In Proceedings of the International Conference Computational Creativity, 2014.

- G. N. Yannakakis, A. Liapis, and C. Alexopoulos. "Mixed-initiative co-creativity." In Proceedings of the Foundations Digital Games, 2014.

- G. N. Yannakakis and J. Togelius. Artificial intelligence and games. vol. 2. Springer, 2018.

- S. Colton and G. A. Wiggins. "Computational creativity: The final frontier?" In Proceedings of the European Conference Artificial Intelligence, 2012.

- T. Taylor. "Evolutionary innovations and where to find them: Routes to open-ended evolution in natural and artificial systems." Artificial life, vol. 25, no. 2, pp. 207-224, 2019.

- S. Stepney. "Modelling and measuring open-endedness." Artificial Life, vol. 25, no. 1, p. 9, 2021.

- H. F. Harlow. "Learning and satiation of response in intrinsically motivated complex puzzle performance by monkeys." J. comp. physiol. psych., vol. 43, no. 4, pp. 289, 1950.

- R. M. Ryan and E. L. Deci. "Intrinsic and extrinsic motivations: Classic definitions and new directions." Contemporary educational psychology, vol. 25, no. 1, pp. 54-67, 2000.

- G. N. Yannakakis and J. Togelius. "Experience-driven procedural content generation." IEEE Transactions Affective Computing, vol. 2, no. 3, pp. 147-161, 2011.

- D. Gravina, A. Khalifa, A. Liapis, J. Togelius, and G. N. Yannakakis. "Procedural content generation through quality diversity." In Proceedings of the IEEE Conference Games, 2019.

- J.-B. Mouret and J. Clune. "Illuminating search spaces by mapping elites." arXiv preprint arXiv:1504.04909, 2015.

- D. Gravina, A. Liapis, and G. Yannakakis. "Surprise search: Beyond objectives and novelty." In Proceedings of the Genetic Evolutionary Computation Conference, 2016.

- A. Summerville, S. Snodgrass, M. Guzdial, C. Holmgård, A. K. Hoover, A. Isaksen, A. Nealen, and J. Togelius. "Procedural content generation via machine learning (PCGML)." IEEE Transactions Games, vol. 10, no. 3, pp. 257-270, 2018.

- A. Summerville and M. Mateas. "Super mario as a string: Platformer level generation via lstms." arXiv preprint arXiv:1603.00930, 2016.

- R. Jain, A. Isaksen, C. Holmgård, and J. Togelius. "Autoencoders for level generation, repair, and recognition." In Proceedings of the ICCC workshop computational creativity games, 2016.

- V. Volz, J. Schrum, J. Liu, S. M. Lucas, A. Smith, and S. Risi. "Evolving mario levels in the latent space of a deep convolutional generative adversarial network." In Proceedings of the genetic evolutionary computation Conference, 2018.

- A. Liapis, G. N. Yannakakis, and J. Togelius. "Constrained Novelty Search: A Study on Game Content Generation." Evolutionary Computation, vol. 23, no. 1, pp. 101-129, 2015.

- T. Shu, J. Liu, and G. N. Yannakakis. "Experience-driven PCG via reinforcement learning: A Super Mario Bros study." In Proceedings of the IEEE Conference Games, 2021.

- S. Alvernaz and J. Togelius. "Autoencoder-augmented neuroevolution for visual doom playing." In Proceedings of the IEEE Conference Computational Intelligence Games, 2017.

- C. Salge, M. C. Green, R. Canaan, F. Skwarski, R. Fritsch, A. Brightmoore, S. Ye, C. Cao, and J. Togelius. "The AI Settlement Generation Challenge in Minecraft: First Year Report." KI-Künstliche Intelligenz, vol. 34, no. 1, pp. 19-31, 2020.

- C. Salge, C. Guckelsberger, M. C. Green, R. Canaan, and J. Togelius. "Generative design in minecraft: Chronicle challenge." In Proceedings of the 10th Int. Conference Computational Creativity, 2019.

- M. C. Green, C. Salge, and J. Togelius. "Organic building generation in minecraft." In Proceedings of the International Conference Foundations Digital Games, 2019.

- S. Sudhakaran, D. Grbic, S. Li, A. Katona, E. Najarro, C. Glanois, and S. Risi. "Growing 3D Artefacts and Functional Machines with Neural Cellular Automata." In Proceedings of the Conference Artificial Life, 2021.

- M. Awiszus, F. Schubert, and B. Rosenhahn. "World-GAN: a Generative Model for Minecraft Worlds." In Proceedings of the IEEE Conference Games, 2021.

- MCEdit: World Editor for Minecraft. Accessed Jan. 24, 2022. Available at: https://www.mcedit.net/.

- K. O. Stanley. "Compositional pattern producing networks: A novel abstraction of development." Genetic programming evolvable machines, vol. 8, no. 2, pp. 131-162, 2007.

- D. Gravina, A. Liapis, and G. N. Yannakakis. "Fusing novelty and surprise for evolving robot morphologies." In Proceedings of the Genetic Evolutionary Computation Conference, 2018.

- K. O. Stanley and R. Miikkulainen. "Evolving neural networks through augmenting topologies." Evolutionary Computation, vol. 10, no. 2, pp. 99-127, 2002.

- Z. Zhang and M. R. Sabuncu. "Generalized cross entropy loss for training deep neural networks with noisy labels." In Proceedings of the Neural Information Processing Systems Conference, 2018.

- S. M. Lucas and V. Volz. "Tile pattern kl-divergence for analysing and evolving game levels." In Proceedings of the Genetic Evolutionary Computation Conference, 2019.

- A. Brightmoore. AHousev5 MCEdit Filter. Accessed Jan. 24, 2022. Available at: http://www.brightmoore.net/mcedit-filters-1/ahouse.

- S. O. Kimbrough, G. J. Koehler, M. Lu, and D. H. Wood. "On a feasible--infeasible two-population (fi-2pop) genetic algorithm for constrained optimization: Distance tracing and no free lunch." Eur. J. Oper. Res., vol. 190, no. 2, pp. 310-327, 2008.

- M. Zammit, A. Liapis, and G. N. Yannakakis. "Seeding Diversity into AI Art." In Proceedings of the International Conference Computational Creativity, 2022.